A TensorFlow Implementation of Dilated Recurrent Neural Networks

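It is well known that learning long sequences with recurrent neural networks (RNNs) is difficult. There are three main challenges: 1) extracting complex dependencies, 2) vanishing and exploding gradients, and 3) efficient parallelization. The paper introduces a simple yet effective RNN connection structure, the DILATEDRNN, which tackles all three challenges at once.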

Paper: https://arxiv.org/abs/1710.02224

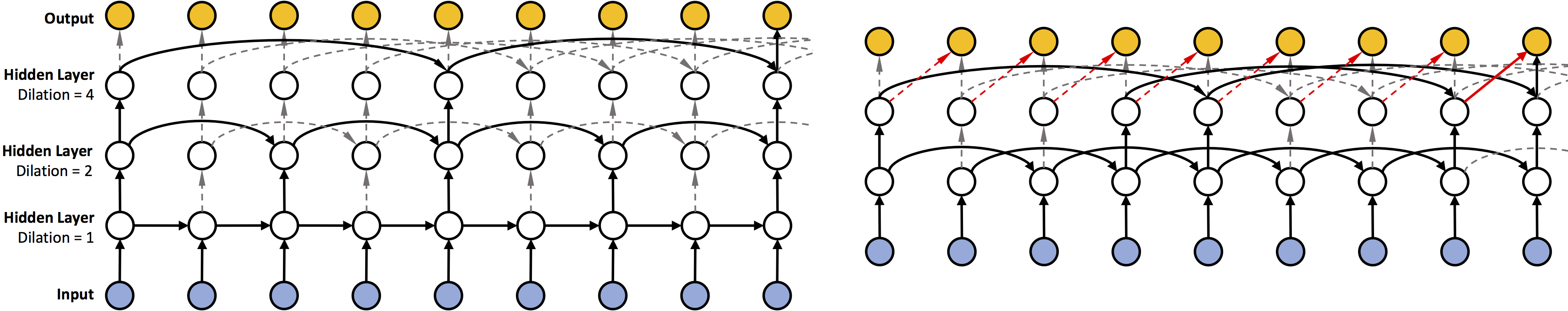

Notoriously, learning with recurrent neural networks (RNNs) on long sequences is a difficult task. There are three major challenges: 1) extracting complex dependencies, 2) vanishing and exploding gradients, and 3) efficient parallelization. In this paper, we introduce a simple yet effective RNN connection structure, the DILATEDRNN, which simultaneously tackles all these challenges. The proposed architecture is characterized by multi-resolution dilated recurrent skip connections and can be combined flexibly with different RNN cells. Moreover, the DILATEDRNN reduces the number of parameters and enhances training efficiency significantly, while matching state-of-the-art performance (even with Vanilla RNN cells) in tasks involving very long-term dependencies. To provide a theory-based quantification of the architecture’s advantages, we introduce a memory capacity measure – the mean recurrent length, which is more suitable for RNNs with long skip connections than existing measures. We rigorously prove the advantages of the DILATEDRNN over other recurrent neural architectures.
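To make the idea of a dilated recurrent skip connection concrete, here is a minimal NumPy sketch of a single layer with a vanilla tanh cell. It is an illustration only, not the repository's TensorFlow implementation: the function name `dilated_rnn_layer`, the weight names, and the zero initial state are all assumptions made for this example. The only change from an ordinary RNN is that the state at step t is computed from the state at step t - dilation instead of t - 1.

```python
import numpy as np


def dilated_rnn_layer(inputs, dilation, w_in, w_rec, bias):
    """Vanilla tanh RNN layer whose recurrent link skips `dilation` steps.

    inputs:  array of shape (n_steps, input_dim)
    returns: array of shape (n_steps, hidden_dim)
    """
    n_steps = inputs.shape[0]
    hidden_dim = w_rec.shape[0]
    states = np.zeros((n_steps, hidden_dim))
    for t in range(n_steps):
        # Use the state from `dilation` steps back; zeros before the start
        # of the sequence (an assumption of this sketch).
        prev = states[t - dilation] if t >= dilation else np.zeros(hidden_dim)
        states[t] = np.tanh(np.dot(inputs[t], w_in) + np.dot(prev, w_rec) + bias)
    return states


# Toy usage: 12 steps of 3-dimensional input, 5 hidden units, dilation 4.
rng = np.random.RandomState(0)
x = rng.normal(size=(12, 3))
w_in = rng.normal(scale=0.5, size=(3, 5))
w_rec = rng.normal(scale=0.5, size=(5, 5))
bias = np.zeros(5)
states = dilated_rnn_layer(x, dilation=4, w_in=w_in, w_rec=w_rec, bias=bias)
print(states.shape)  # (12, 5)
```

With a dilation of 4, the input at step 0 can only influence the states at steps 0, 4, and 8 within this layer. Steps that are not connected through the skip link form independent chains, which is what leaves room for parallel computation.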

Project: https://github.com/code-terminator/DilatedRNN

Getting Started

The lightweight demo shows how to construct a multi-layer DilatedRNN with different cells, hidden structures, and dilations for the task of permuted sequence classification on MNIST. Although most of the code is straightforward, we would like to provide examples of different network constructions.

Below is an example that constructs a 9-layer DilatedRNN with vanilla RNN cells. The hidden dimension is 20. The dilation starts at 1 at the bottom layer and ends at 256 at the top layer.

cell_type = "RNN"

hidden_structs = [20] * 9

dilations = [1, 2, 4, 8, 16, 32, 64, 128, 256]
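The dilation list above doubles at every layer, so it can also be generated rather than typed out. The sketch below assumes a hypothetical helper, `exponential_dilations`, which is not part of the repository; it only shows how the three settings line up, with one hidden size and one dilation per layer, bottom layer first.

```python
def exponential_dilations(n_layers, start=1, base=2):
    """Return start, start*base, start*base**2, ... (one dilation per layer)."""
    return [start * base ** i for i in range(n_layers)]


cell_type = "RNN"
hidden_structs = [20] * 9
dilations = exponential_dilations(n_layers=9)
print(dilations)  # [1, 2, 4, 8, 16, 32, 64, 128, 256]

# Each layer pairs one hidden size with one dilation, bottom layer first.
for layer, (hidden_dim, dilation) in enumerate(zip(hidden_structs, dilations), start=1):
    print("layer %d: hidden_dim=%d, dilation=%d" % (layer, hidden_dim, dilation))
```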

The current version of the code supports three cell types: "RNN", "LSTM", and "GRU". The code also supports a dilation greater than 1 at the bottom layer (as shown on the right-hand side of Figure 2 in our paper).

The example below constructs a 4-layer DilatedRNN with GRU cells, with dilations starting at 4. Note that the dilations do not need to increase by powers of 2, and the hidden dimensions do not need to be the same across layers.

cell_type = "GRU"

hidden_structs = [20, 30, 40, 50]

dilations = [4, 8, 16, 32]
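Because the three settings have to agree with each other, it can help to check a configuration before building the graph. The following is a sketch under stated assumptions, not the repository's own validation: `validate_config` and `SUPPORTED_CELL_TYPES` are hypothetical names, and the rules checked (a supported cell type, one hidden size and one dilation per layer, positive integers) are inferred from the examples above.

```python
SUPPORTED_CELL_TYPES = ("RNN", "LSTM", "GRU")


def validate_config(cell_type, hidden_structs, dilations):
    """Raise ValueError on an inconsistent config; otherwise return the layer count."""
    if cell_type not in SUPPORTED_CELL_TYPES:
        raise ValueError("unsupported cell_type: %r" % (cell_type,))
    if len(hidden_structs) != len(dilations):
        raise ValueError("hidden_structs and dilations need one entry per layer")
    for name, values in (("hidden_structs", hidden_structs), ("dilations", dilations)):
        if any(not isinstance(v, int) or v < 1 for v in values):
            raise ValueError("%s must contain positive integers" % name)
    return len(dilations)


n_layers = validate_config("GRU", [20, 30, 40, 50], [4, 8, 16, 32])
print(n_layers)  # 4

# Dilations that are not powers of 2 pass the same checks.
validate_config("LSTM", [20, 20, 20], [1, 3, 9])
```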

Tested environment: TensorFlow 1.3 and NumPy 1.13.1.

Final Words

That’s all for now. We hope this repo is useful for your research. If you have any questions, please create an issue and we will get back to you as soon as possible.

More machine learning resources: http://www.tensorflownews.com/